Years ago, I remember taking a tour of what was then one of those newfangled “innovation labs”.

A hive of Design Thinking, it was crawling with serious young people in jeans and t-shirts scribbling on walls and rearranging herds of Post-it notes.

In an otherwise old-fashioned financial services organisation, it was an impressive tilt towards modernisation and true customer centricity (beyond the warm and fuzzy TV commercials).

After our guide had finished explaining this brave new world to the group, one of us asked him to share a project he’d been working on. He proudly explained how the year prior, the lab had applied the progressive methodology to the development of a new product which had, finally, launched.

Which raised the next question… How many new customers had it signed up? His straight-faced answer: Seven.

Seven!

For a bank with literally millions of customers, this was astounding. And he didn’t seem all that bothered by it. The apparent solution was to go back to the drawing board and try again.

While I was still doing the math in my head on the negative return on investment, I stumbled upon the myth of The Yellow Walkman. I neither confirm nor deny its veracity, but Alexander Cowan recounts it as follows in his article “Yellow Walkman Data & the Art of Customer Discovery”:

> Sony’s conducting a focus group for a yellow ‘sport’ Walkman. After assembling their ‘man/woman on the street’ contingent, they ask them ‘Hey, how do you like this yellow Walkman?’ The reception’s great. ‘I love that yellow Walkman – it’s so sporty!’ ‘Man, would I rather I have a sweet yellow Walkman instead of a boring old black one.’
>
> While everyone’s clinking glasses, someone had the insight to offer the participants a Walkman on their way out. They can choose either the traditional black edition or the sporty new yellow edition – there are two piles of Walkmans on two tables on the way out. Everyone takes a black Walkman.

It’s an old story, but its message remains relevant today: humans are terrible at predicting their own behaviour.

You see, talk is cheap. Everyone has great ideas… when someone else has to implement them. And if you ask someone point-blank whether they want something, nine times out of ten they’ll say yes. Then they never use it, and you’re left carrying the can, wondering where you went wrong.

We see this kind of thing all the time in workplace learning and development. Someone in the business will demand we build an online course that no one will launch, or a manager will pull a capability out of thin air, oblivious to the real needs of their team.

As Cowan suggests, this can be mitigated by thoughtful questioning that avoids the solution-first trap. And of course, the whole point of the MVP (minimum viable product) approach championed by Design Thinking is to minimise losses by failing fast.

But we can do something else before we get to that point: validate.

In the yellow Walkman example, Cowan offers:

> Sony’s product designer mocks up several colors of Walkman and puts together some kind of an ordering page with the options. Focus group subjects (or just online visitors) are allowed to pre-order what they want. This gets you the same result without having to actually produce a whole bunch of yellow (or whatever) Walkmans.

In the L&D context, I suggest complementing our training needs analysis (TNA) consultations with assessments. So the team needs to develop x capability? Test it. They’re all over y competency? Test it.

And it needn’t be expensive or onerous. A micro-assessment approach should be enough to expose the blind spots.

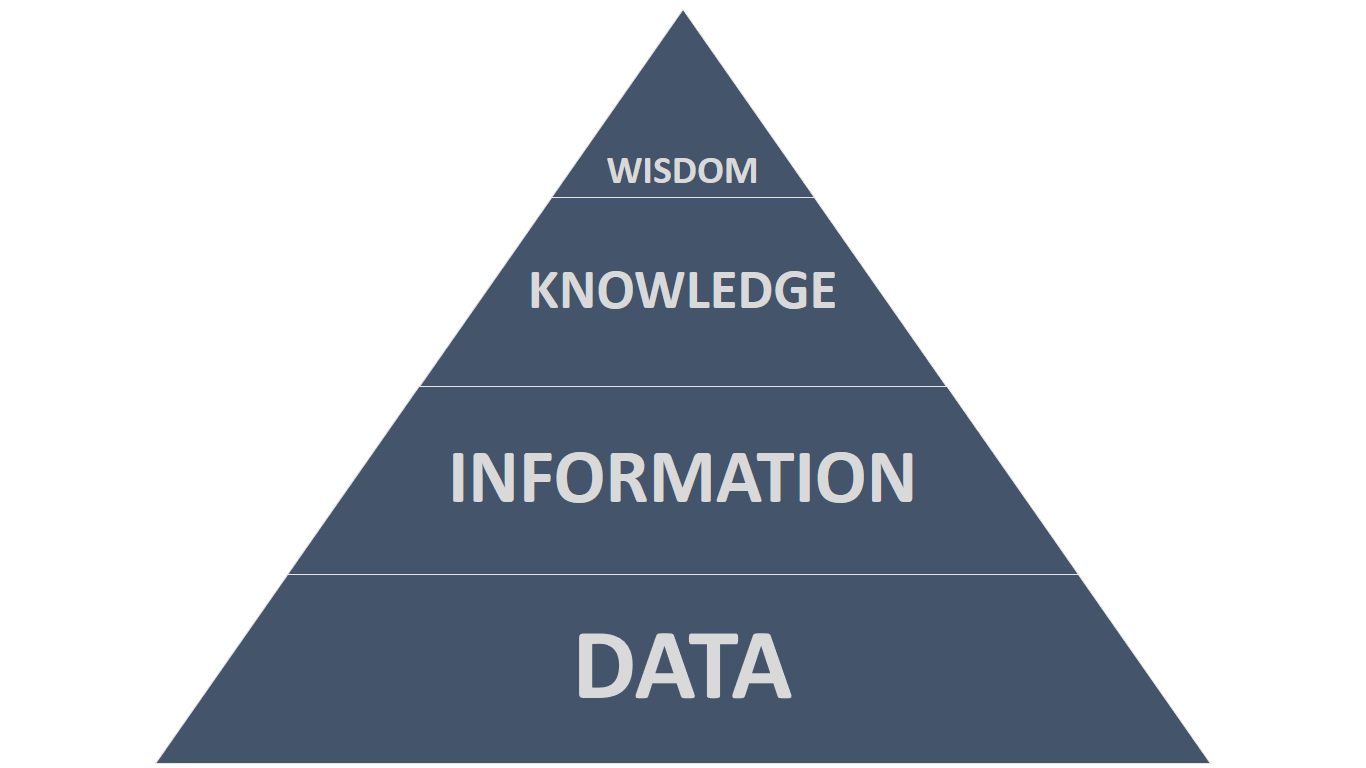

By validating your qualitative data with quantitative data, you’re building extra confidence into your bet and improving its odds of success.

Lest it sink like a yellow submarine.