Evidence-based practice is the darling of Learning & Development geeks.

And with good reason. Amongst all the myths and barrow pushing, not to mention the myriad ways to approach any given problem, empiricism injects a sorely needed dose of confidence into what we do.

Friends of my blog will already know that my undergraduate degree was in science, and although I graduated a thousand years ago, it forever equipped me with a lens through which I see the world. Suffice to say my search for statistical significance is serious.

But it is through this same lens that I also see the limitations of research. Hence I urge caution when using it to inform our decisions.

For instance, I recently called out the “captive audience” that characterises many of the experiments undertaken in the educational domain. Subjects who are compelled to participate in these activities may not behave as they would when they are free to choose, which could, for example, complicate your informal learning strategy.

Hence studies undertaken in the K12 or higher education sectors may not translate so well to the corporate sector, where the dynamics of the environment are different. But even when a study is set among more authentic or relevant conditions, the results are unlikely to be universally representative. By design a study locks down its variables, which makes it challenging to compare apples with apples once your own conditions differ.

In short, all organisations are different. They have different budgets, systems, policies, processes, and most consequentially, cultures. So if a study or even a meta-analysis demonstrates the efficacy of the flipped classroom approach to workplace training, it mightn’t replicate at your company because no one does the pre-work.

Essentially it’s a question of probability. Subscribing to the research theoretically shifts the odds of success in your favour, but it’s not a sure thing. You never know where along the normal distribution you’re gonna be.

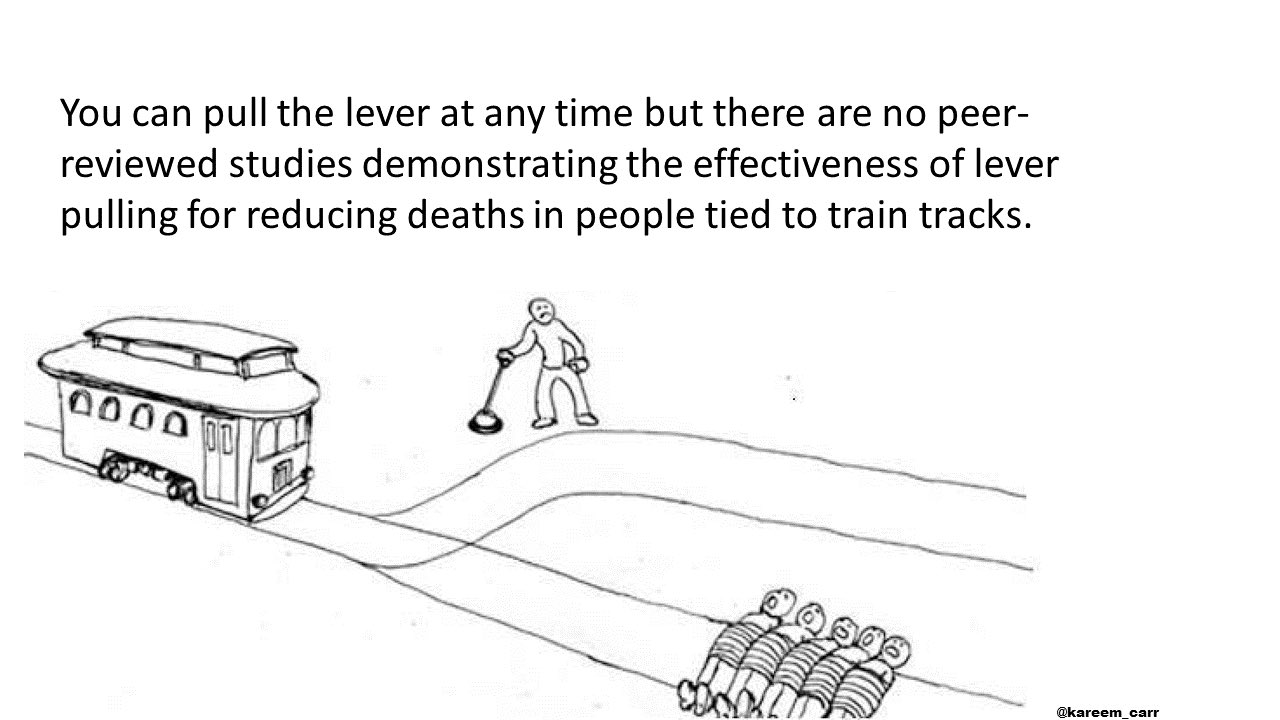
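
To put rough numbers on those odds, here is a minimal sketch in Python. It assumes, purely for illustration, that the effect of an intervention varies across organisations along a normal distribution; the function name `chance_of_benefit` and the figures fed into it are hypothetical and not drawn from any study.

```python
from statistics import NormalDist


def chance_of_benefit(mean_effect: float, spread: float) -> float:
    """Probability that a single organisation sees an effect above zero.

    Assumption (illustrative only): effects across organisations follow a
    normal distribution with the given mean and standard deviation.
    """
    effects = NormalDist(mu=mean_effect, sigma=spread)
    # cdf(0.0) is the share of organisations at or below zero effect,
    # so the remainder is the share that benefits.
    return 1.0 - effects.cdf(0.0)


# Hypothetical average effects, measured in standard deviations.
for mean_effect in (0.0, 0.5, 1.0):
    odds = chance_of_benefit(mean_effect, spread=1.0)
    print(f"average effect {mean_effect:.1f} -> chance of benefit {odds:.2f}")
```

Under these assumptions, an intervention with no average effect is a coin toss, and a stronger average effect improves your chances without ever guaranteeing a result.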

My argument thus far has assumed that quality research exists. Yet despite the plethora of journals and books and reports and articles, we’ve all struggled to find coverage of that one specific topic. Perhaps it’s behind a paywall, or the experimental design is flawed, or the study simply hasn’t been done.

So while we may agree in principle that evidence-based practice is imperative, the reality of business dictates that in fact much of that evidence is lacking. In its absence, we have no choice but to rely on logic, experience, and sometimes gut instinct, informed by observation, conversation, and innovation.

In your capacity as an L&D professional, you need to run your own experiments within the context of your own organisation. By all means take your cues from the available research, but do so with a critical mindset, and fill in the gaps with action.

Find out what works in your world.